An Overview

For nearly a century, the oil refining industry has used carbon capture technologies to separate carbon dioxide (CO2) from other marketable gases. Then, in the 1970s, oil producers began injecting carbon dioxide into declining oil wells to boost production, a process known as “enhanced oil recovery” (EOR). Finally, in the 1990s, the Norwegian company Statoil (now Equinor) began receiving government subsidies to sequester captured carbon underground in an effort to reduce greenhouse gas emissions.

To this day, EOR remains the principal use of captured carbon in the United States, though most EOR projects rely on natural CO2 sources rather than CO2 captured at industrial facilities, and only a tiny amount of that industrially captured CO2 is sequestered underground. However, as concern about global warming has grown, so has interest in using carbon capture and storage technology to reduce emissions from otherwise hard-to-decarbonize industries, such as steelmaking and cement production. Today, the International Energy Agency reports 839 carbon capture projects globally. But only 51 are described as “operational,” with another 44 “under construction,” and most of these are pilot or test projects. The remaining 744 projects are classified as “planned.”
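As a quick check on the International Energy Agency figures in the paragraph above, the short Python sketch below tallies the three status categories and expresses each as a share of the total. It uses only the counts quoted in the text; the dictionary layout is simply an illustrative choice.

```python
# Status breakdown of the IEA carbon capture project counts quoted in the text.
projects = {"operational": 51, "under construction": 44, "planned": 744}

total = sum(projects.values())
assert total == 839  # the three categories should add up to the IEA total

for status, count in projects.items():
    # Left-align the status, right-align the count, and show the share as a percentage.
    print(f"{status:<20} {count:>4}  {count / total:6.1%}")
```

Run as-is, it confirms that the three categories sum to 839 and that roughly 6% of listed projects are operational, about 5% are under construction, and nearly 89% are still classified as “planned.”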

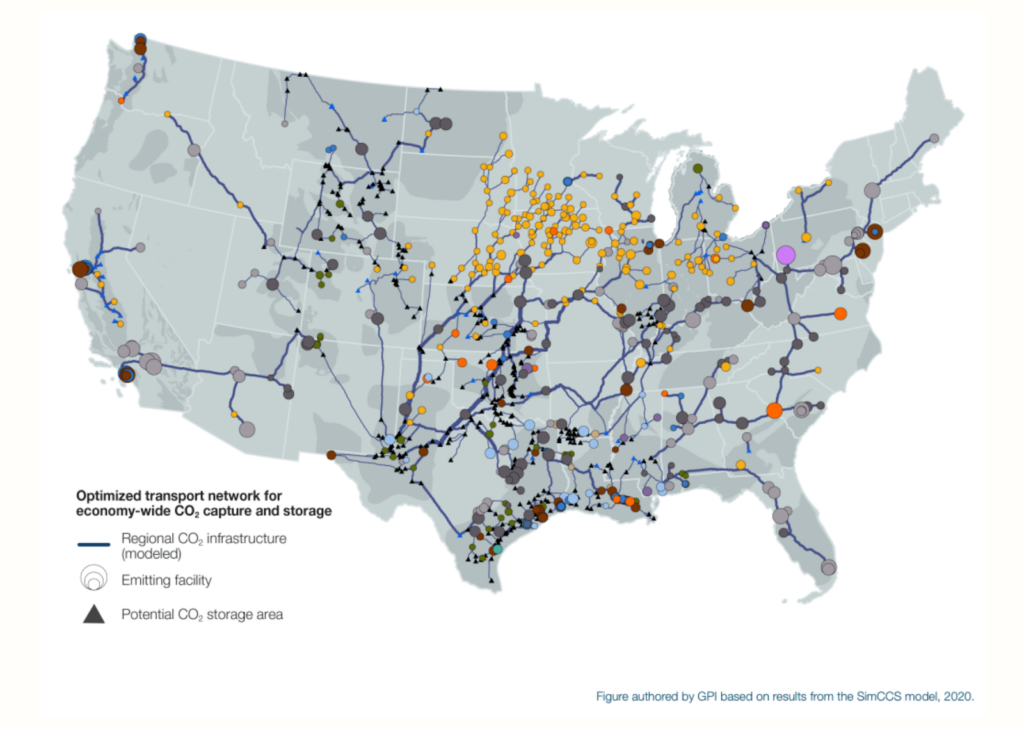

The United States currently has just over 5,000 miles of pipelines, mostly along the Gulf Coast and in the Midwest, devoted to transporting CO2 to locations where it is either used for enhanced oil recovery or sequestered underground. However, carbon capture proponents have a much grander vision of the role the technology could play.

A Much Larger Vision

Some organizations with influence in the US government envision a far bigger role for carbon capture. One of them, the Great Plains Institute, promotes extending carbon capture to a much wider array of industries, including natural gas processing, ethanol production, hydrogen production and, notably, the nation’s more than 2,200 coal- and gas-fired power plants. That would require tens of thousands of miles of pipelines reaching nearly every state in the continental US. An analysis by the National Energy Technology Laboratory estimates that the network could grow to as much as 96,000 miles.

Because natural gas production and processing, along with coal- and gas-fired power generation, are so prevalent in northern Appalachia, much of this construction would likely take place in our region. However, two barriers stand in the way of this vision: cost and carbon capture’s generally abysmal performance history.

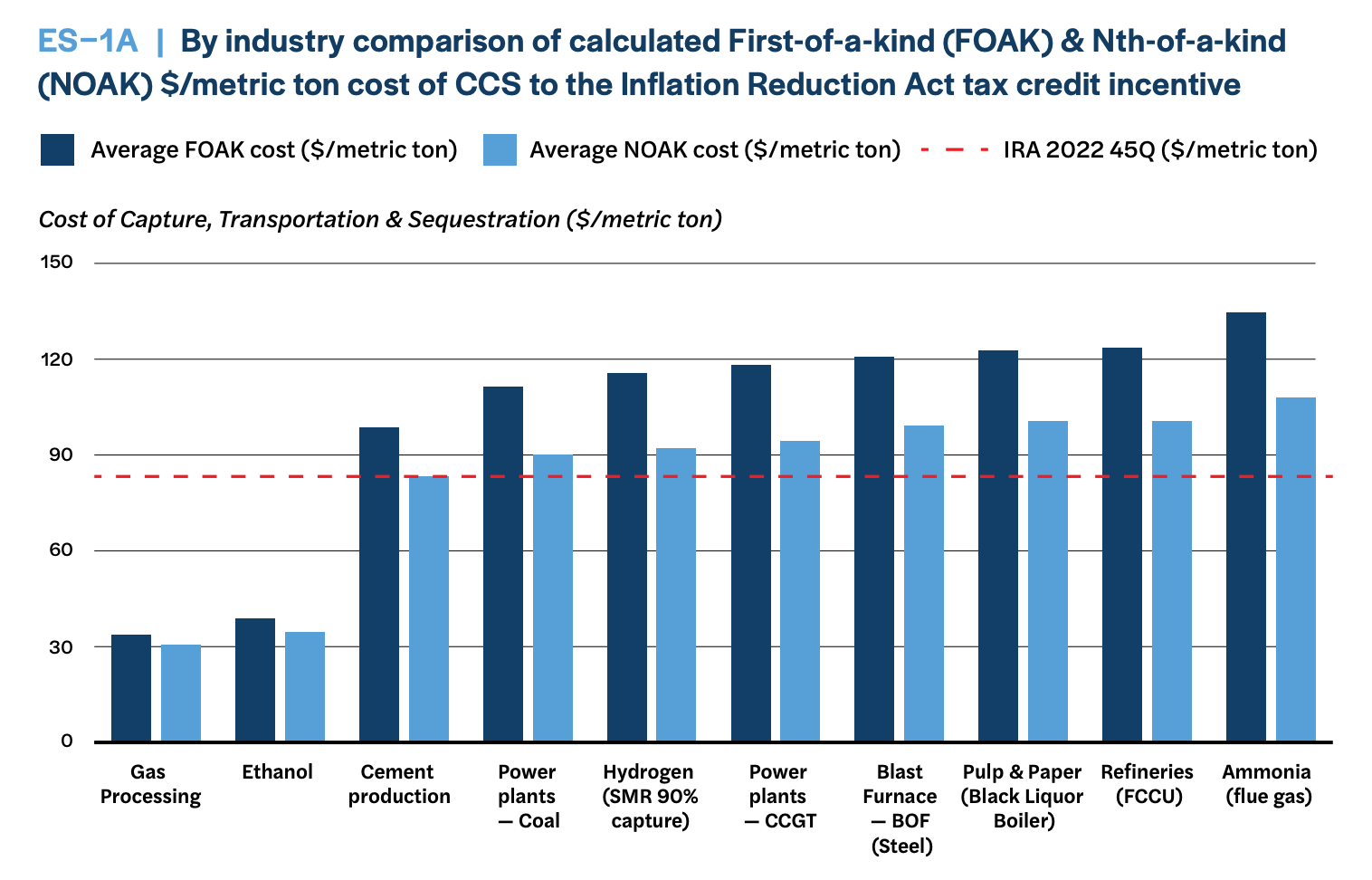

Carbon Capture Makes Everything It Touches More Expensive

Carbon capture technology is expensive to build and to operate. According to recent estimates, it more than doubles the cost of building a gas-fired power plant and can triple the cost of electricity from a coal-fired plant. Cost increases of that size might be tolerable in industrial sectors that don’t readily lend themselves to more cost-effective means of decarbonization, but they make no sense in sectors such as power generation, where wind, solar, and battery storage can reduce or eliminate carbon emissions more effectively at little more than what we pay now. Besides, carbon capture doesn’t work very well.

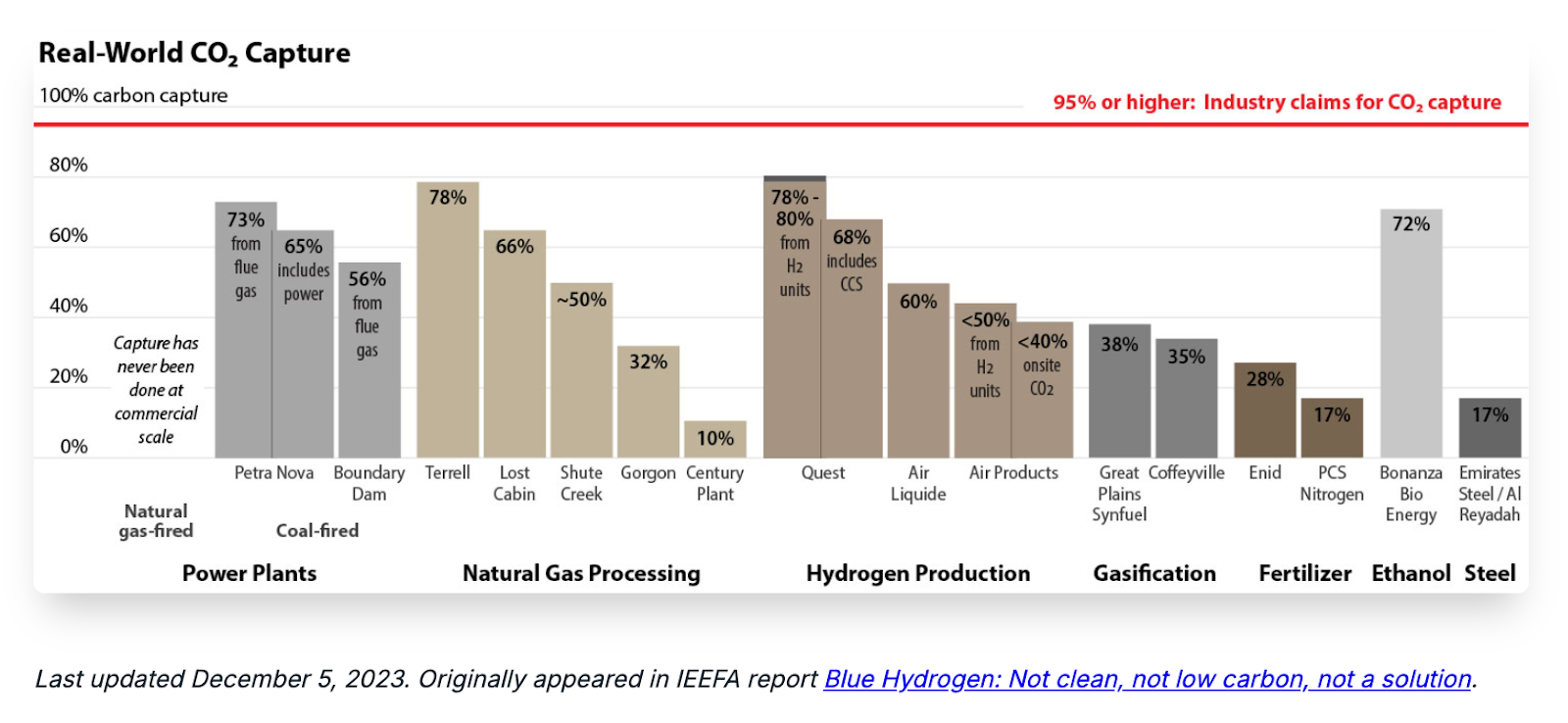

The Institute for Energy Economics & Financial Analysis (IEEFA) regularly tracks the emissions-reduction performance of US carbon capture projects and has found that few, if any, projects have ever approached the emission reductions of 90% or more claimed by carbon capture proponents.

For that reason, most industries that contemplate deploying carbon capture at scale do not anticipate doing so for another decade or more.

Nonetheless, the Department of Energy Is Giving Carbon Capture a Big Push

The Inflation Reduction Act (IRA) offers what are often absurdly large subsidies for a technology that is fundamentally uneconomic in many industries. At $85 per metric ton of CO2 captured and stored, the carbon capture and storage tax credit would allow a coal-fired power plant that sells about $250 million worth of electricity annually, and emits nearly a metric ton of CO2 for each megawatt hour it generates, to collect a carbon capture subsidy of nearly $500 million a year. In other words, we would be paying a power plant twice as much to capture and bury carbon as we pay it to generate electricity.
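The arithmetic behind this comparison can be reconstructed from the figures in the paragraph. The Python sketch below works backward from the roughly $500 million annual subsidy: dividing by the $85-per-ton credit gives the tons of CO2 captured, an assumed intensity of 1.0 metric ton of CO2 per megawatt hour converts that to annual generation, and the $250 million revenue figure then yields the average electricity price the example implies. The 1.0 t/MWh intensity and the assumption that every ton emitted is captured and stored are simplifications for illustration, not measured plant data.

```python
# Back-of-the-envelope reconstruction of the coal-plant subsidy example.
CREDIT_PER_TONNE = 85.0    # USD per metric ton of CO2 captured and stored
ANNUAL_REVENUE = 250e6     # USD of electricity sold per year (from the text)
ANNUAL_SUBSIDY = 500e6     # USD per year ("nearly $500 million" in the text)
TONNES_CO2_PER_MWH = 1.0   # assumption: "nearly a metric ton" of CO2 per MWh


def implied_figures(credit, revenue, subsidy, intensity):
    """Work backward from the subsidy to the tonnage, output, and power price it implies."""
    tonnes_captured = subsidy / credit           # metric tons of CO2 per year
    mwh_generated = tonnes_captured / intensity  # MWh per year, assuming full capture
    price_per_mwh = revenue / mwh_generated      # implied average electricity price
    ratio = subsidy / revenue                    # subsidy dollars per dollar of electricity
    return tonnes_captured, mwh_generated, price_per_mwh, ratio


tonnes, mwh, price, ratio = implied_figures(
    CREDIT_PER_TONNE, ANNUAL_REVENUE, ANNUAL_SUBSIDY, TONNES_CO2_PER_MWH
)
print(f"CO2 captured:      {tonnes / 1e6:.1f} million t/yr")
print(f"Generation:        {mwh / 1e6:.1f} million MWh/yr")
print(f"Implied price:     ${price:.2f}/MWh")
print(f"Subsidy / revenue: {ratio:.1f}x")
```

With these inputs, the sketch reports about 5.9 million tons of CO2 and 5.9 million MWh per year, an implied average price of $42.50 per MWh, and a subsidy-to-revenue ratio of 2.0, which is the “twice as much” in the text. Because the credit is paid per ton actually captured and stored, a plant capturing less than all of its emissions would collect proportionally less.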

Gas-fired power plants emit a little less than half as much CO2 per megawatt hour as coal-fired plants. Consequently, the subsidy would be smaller, but still absurdly large. The same economics would play out across other industries as well: the size of the cost increase would vary, but in every instance costs would go up.
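Because the credit is paid per ton of CO2, the subsidy for a given amount of electricity scales directly with a plant’s emission intensity. The Python sketch below expresses the $85 credit per megawatt hour for a coal plant and a gas plant. The intensities are illustrative assumptions: 1.0 t CO2/MWh for coal, matching “nearly a metric ton,” and 0.45 t CO2/MWh for gas, chosen to match “a little less than half.” Real plants vary with efficiency and design, and the `capture_rate` parameter is a hypothetical knob that defaults to full capture.

```python
# Tax credit earned per MWh generated, for illustrative coal and gas emission intensities.
CREDIT_PER_TONNE = 85.0  # USD per metric ton of CO2 (the IRA credit rate cited in the text)

# Hypothetical, illustrative emission intensities in metric tons of CO2 per MWh.
INTENSITY_T_PER_MWH = {
    "coal": 1.0,   # "nearly a metric ton" per MWh
    "gas": 0.45,   # "a little less than half" of coal
}


def credit_per_mwh(intensity, credit=CREDIT_PER_TONNE, capture_rate=1.0):
    """Return the subsidy in USD per MWh generated, given tons of CO2 emitted per MWh
    and the fraction of those emissions captured and stored."""
    return credit * intensity * capture_rate


for fuel, intensity in INTENSITY_T_PER_MWH.items():
    print(f"{fuel:>4}: ${credit_per_mwh(intensity):.2f} per MWh")
```

For the same output, the gas plant’s credit comes to roughly $38 per MWh against $85 for coal, about 45% as much: the smaller but still substantial subsidy the paragraph describes. Lowering `capture_rate` shows how the payment shrinks when a plant captures only part of its emissions.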

This past January, the Energy Futures Initiative, a pro-carbon-capture think tank, concluded that the IRA subsidy of $85 per metric ton, although generous, is still insufficient to induce most industries to deploy carbon capture. That means that if these industries, which supply many of the products and services we buy, eventually choose or are forced to deploy carbon capture, we will ultimately absorb the added cost.

So the question isn’t whether prices will be forced up; they will be. Nor is there any question about who will pay. The answer is you. The only question is how you will pay: through your utility bills, through higher prices at the store, or through your taxes. And the answer is almost certainly all of the above.